ML models today are failing silently, and we have no good way to detect those failures. Consider the debacle with Apple Card making sexist credit line decisions, or the pandemic-fueled inability of banks to evaluate creditworthiness. Thousands of models are now making key product and business decisions without checks and balances.

As providers of the Verta MLOps platform, we have helped dozens of teams run ML models in production, including teams serving search queries in your favorite messaging platform. Over the course of partnering with these organizations, we identified three key challenges that make model monitoring hard:

First, it is extremely difficult to know when a model is failing. Models degrade over time because of data and concept drift and, unlike with regular software, feedback on model quality is not real-time. Even when we invest the time and effort to generate ground truth, the usual quality metrics such as accuracy or precision are nuanced, so the feedback is not always black and white.

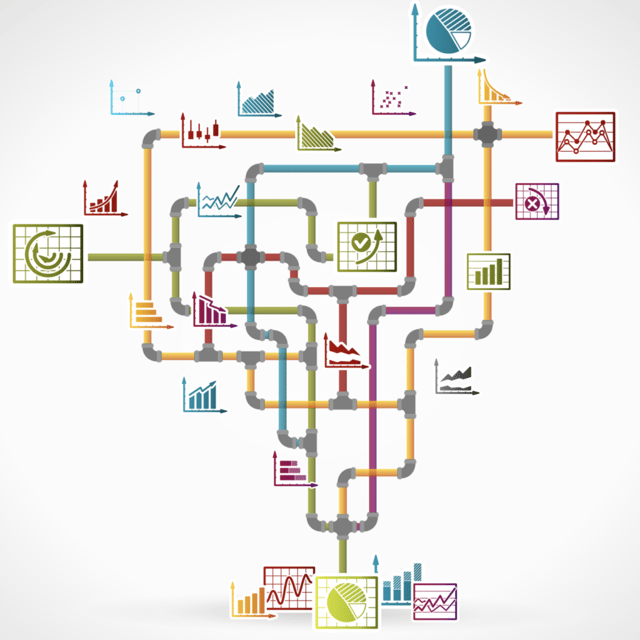

Second, due to the complexity of the pipeline jungles that power ML models, root cause analysis for misbehaving models is extremely hard to perform. The Head of Data Science at one AdTech company told us, “Our ad-serving system saw a huge revenue loss within a matter of 10 minutes and we had no idea why that happened. We had to dig through all kinds of logs to piece together what had happened.” Complex data pipelines, frequent model releases, and our increasing reliance on off-the-shelf models make debugging challenging.

Third, even when a model failure is detected, remediation is challenging. Mean Time to Recovery suffers because of the sheer complexity of the ML lifecycle, with its many dependencies, fragmented teams, and multiple owners of a single pipeline. Global visibility across key systems, combined with an automated MLOps pipeline that triggers immediate actions such as retraining, rollback, or human intervention, can improve response time. It is even better if issues are caught proactively in your pipeline and are not allowed to propagate downstream.

Moreover, traditional application infrastructure monitoring tools (e.g., Datadog, New Relic) are inadequate for the job. They were not built to understand data statistics such as histograms, skew, and kurtosis, or to detect data drift. As models power more and more intelligent applications, we need systematic means to monitor the quality of models.
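
To make those statistics concrete, here is a minimal sketch of the kind of check an infrastructure monitor does not perform out of the box. It uses only the Python standard library and is not the Verta API: the function names (`skewness`, `excess_kurtosis`, `population_stability_index`), the choice of the Population Stability Index as the drift score, the 10-bin histogram, and the 0.2 alert threshold (a commonly cited rule of thumb) are all assumptions made for illustration.

```python
import math
import random
from statistics import fmean, pstdev


def skewness(values):
    """Population skewness: mean of the cubed z-scores."""
    mean, std = fmean(values), pstdev(values)
    return fmean([((v - mean) / std) ** 3 for v in values])


def excess_kurtosis(values):
    """Population excess kurtosis: mean of the z-scores to the 4th power, minus 3."""
    mean, std = fmean(values), pstdev(values)
    return fmean([((v - mean) / std) ** 4 for v in values]) - 3.0


def population_stability_index(reference, live, bins=10, eps=1e-6):
    """Compare two samples by histogramming both on the reference sample's range.

    Returns 0.0 for identical histograms and grows as the live data drifts.
    """
    low, high = min(reference), max(reference)
    width = ((high - low) / bins) or 1.0  # guard against a constant feature

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range live values into the first or last bin.
            index = min(max(int((v - low) / width), 0), bins - 1)
            counts[index] += 1
        # Floor each fraction at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref_frac = bin_fractions(reference)
    live_frac = bin_fractions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_frac, live_frac))


if __name__ == "__main__":
    rng = random.Random(0)
    training = [rng.gauss(0.0, 1.0) for _ in range(5000)]
    production = [rng.gauss(1.0, 1.0) for _ in range(5000)]  # mean has shifted

    score = population_stability_index(training, production)
    print(f"skew={skewness(production):.3f} "
          f"kurtosis={excess_kurtosis(production):.3f} PSI={score:.3f}")
    if score > 0.2:  # illustrative threshold, tune per feature
        print("Drift suspected: compare the live feature against training data.")
```

A check like this would typically run per feature on a schedule, with the reference sample taken from the training data and the live sample from recent production inputs.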

Unveiling Verta Model Monitoring in Community Preview

In the past few months, we have partnered closely with a fabulous set of customers to build a model monitoring solution that solves these hard problems. We’ve taken the best of APM (application performance monitoring) and combined it with the unique needs of ML models to build Verta Model Monitoring.

In particular, Verta Model Monitoring allows you to:

1. Monitor any model, data, or ML pipeline built in any framework (currently Python only)

2. Query, filter, and visualize monitor information via the web UI as well as Python APIs

3. Use out-of-the-box monitors or define your own custom functions to monitor your models

4. Link models to experiments, model versions, and model endpoints for full pipeline visibility

5. Close the loop with alerts, notifications, and automated actions

"With Verta Model Monitoring, we have better visibility on how our intermediate data changes over time. Throwing data on an s3 bucket and waiting for a slow day to look at it means it's never going to get attention. But spending 30 extra seconds to log it as part of a data pipeline is well worth it."

- Jenn Flynn, Principal Data Scientist at LeadCrunch

Today, we are opening Verta Model Monitoring in Community Preview. ML practitioners and academics alike can get full access to the Verta Model Monitoring capabilities and partner closely with our product teams to integrate monitoring into their state-of-the-art ML models and ML pipelines.

Apply here to be part of our Community Preview program.
