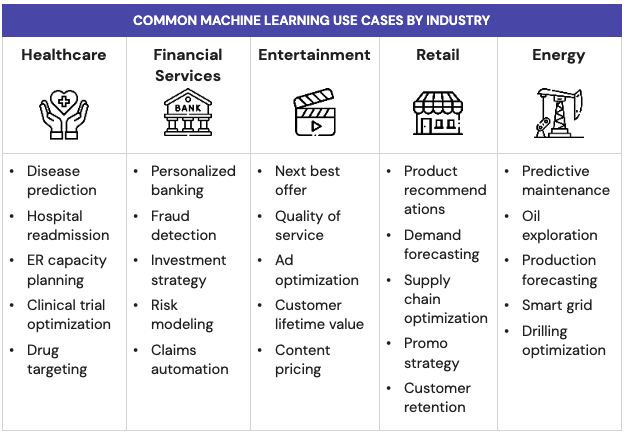

Today’s world is increasingly shaped by AI. Industry after industry has been disrupted by technology built on data and machine learning. Consider transportation. Standing on a corner and hailing a cab has given way to a mobile app that uses machine learning to locate the nearest driver, bring them to your doorstep, and chart the best route to your destination. In healthcare, wearables such as smartwatches use machine learning to establish a baseline for your heart rate and alert you if something looks amiss. And on the factory floor, machine learning predicts equipment failures before they happen, helping avoid costly outages. The use cases are endless.

With that in mind, it’s no surprise that machine learning adoption has grown rapidly. According to a recent report, 59% of large enterprises have adopted machine learning, and 90% of those organizations say it is critical or very important to their operations. The same report also found TensorFlow, R, and PyTorch to be the most critical and widely used open-source ML technologies.

The last-mile challenge

(or why 90% of ML projects fail)

At Verta, we focus on helping organizations maximize the value of their ML projects, and we see these trends unfolding daily. One of the most common patterns is organizations struggling to move their data science and ML projects from a sandbox environment into production.

It’s estimated that roughly 90% of ML models never see the light of day. That makes sense: most of the industry’s innovation has gone into the front end of ML. Popular tools like TensorFlow help data scientists build models rapidly, but little has been done to address the last-mile challenge of putting those models into production, and it turns out that ML model deployment is pretty tricky. Organizations face numerous obstacles at this stage, such as:

- Poorly defined and inconsistent approaches to ML operations

- Differences in software between testing and production environments

- Model performance at scale

- Poor visibility into model performance

- Managing model version control

At Verta, we’re tackling these challenges head-on.

Model deployment made simple

Productionizing ML models doesn’t need to be so hard, we promise. Verta provides an end-to-end MLOps platform that takes your models from development through deployment and beyond, including real-time production monitoring. But for now, let’s focus on deployment.

First, to gain access to the Verta platform, sign up for a free demo and trial. Once you have access, set up the Verta Python client and your Verta credentials.
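Setting up the client usually means running `pip install verta` and providing three settings. Verta’s own examples supply the host, email, and developer key through the `VERTA_HOST`, `VERTA_EMAIL`, and `VERTA_DEV_KEY` environment variables; treat those names as an assumption to confirm for your client version. The hypothetical helper below simply fails fast when one of them is missing, before any network call is made.

```python
import os

# Environment variable names used in Verta's setup examples.
# Assumption: confirm these names for the client version you install.
REQUIRED_VARS = ("VERTA_HOST", "VERTA_EMAIL", "VERTA_DEV_KEY")


def check_verta_settings(env=None):
    """Hypothetical pre-flight check: return the Verta settings or raise if any are unset.

    `env` defaults to os.environ; passing a plain dict makes the check easy to test.
    """
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing Verta settings: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}
```

With the check passing, `from verta import Client` followed by `client = Client(settings["VERTA_HOST"])` gives you a client object for the remaining steps.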

Deploying a model on Verta takes just two steps: (1) log a model with the right interface, and (2) deploy it with a click. Unlike services such as AWS Lambda or Google Cloud Run, there is no need to worry about fixed resource limits or about making your model fit within them.

As we discussed earlier, TensorFlow is one of the most popular open-source tools for building ML models. We know our customers love using TensorFlow, so we’ve made deploying TensorFlow models a seamless experience. In the remainder of this post, we’ll walk through the steps to get your TensorFlow models into production.

Deploying a TensorFlow model on Verta

You’ve trained your TensorFlow model and you love it, so let’s get it live. As mentioned above, deploying models with Verta is a simple two-step process that takes only minutes.

Step 1: Create a TensorFlow Endpoint for Easy Deployment

Verta endpoints provide a stable, scalable way to deploy your models into production. An endpoint is a containerized model-serving microservice that delivers real-time predictions through a REST API or the client libraries.
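Because an endpoint is an ordinary HTTP service, any HTTP client can call it. The standard-library sketch below builds and sends a JSON POST request. The URL and any authentication headers are placeholders to copy from your endpoint’s page in the Verta web app; this post does not specify them, and the helper names are hypothetical.

```python
import json
import urllib.request


def build_prediction_request(url, payload, headers=None):
    """Build (without sending) a JSON POST request for a prediction endpoint.

    `url` and `headers` are placeholders: use the values shown for your endpoint.
    """
    body = json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(url, data=body, method="POST")
    request.add_header("Content-Type", "application/json")
    for name, value in (headers or {}).items():
        request.add_header(name, value)
    return request


def send_prediction_request(request, timeout=10.0):
    """Send the request and decode the JSON body of the response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Only `send_prediction_request` touches the network; keeping request construction separate makes the payload easy to inspect in tests.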

With Verta, you create an endpoint by calling Client.create_endpoint() with a path for the new endpoint.
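A minimal sketch of this step, assuming `client` is an authenticated `verta.Client`. Besides `create_endpoint()`, the Verta client (as far as we know) also offers `get_or_create_endpoint()`, which returns the existing endpoint when the path is already taken and so makes a notebook cell safe to re-run; verify both against your client’s API reference. The helper name and the example path are hypothetical.

```python
def get_tensorflow_endpoint(client, path, reuse_existing=True):
    """Return a Verta endpoint served at `path` (hypothetical helper).

    `client` is expected to be a verta.Client. With reuse_existing=True the
    call can be repeated safely; otherwise a brand-new endpoint is requested.
    The endpoint serves nothing until a model version is attached (Step 2).
    """
    if reuse_existing:
        return client.get_or_create_endpoint(path)
    return client.create_endpoint(path)


# Example usage (requires `pip install verta` and valid credentials):
#   from verta import Client
#   client = Client("https://app.verta.ai")  # placeholder host
#   endpoint = get_tensorflow_endpoint(client, "/tensorflow-example")
```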

Step 2: Update the Endpoint with a Verta Registered Model Version

Now that your endpoint is set up, you need to connect it to your model.

We know tracking and managing models can be a nightmare. That’s why we built the Verta Model Registry, a central place for teams to find, publish, and use ML models. The registry provides model documentation, governance, and versioning to help teams move faster and share their work. Teams integrating the model into a production application can use the registry and the Verta platform to stay up to date on the latest model versions and changes.

Before you can connect your endpoint to your TensorFlow model, you need to create a Registered Model Version (RMV) for the model in the registry. There are multiple ways to create an RMV for a TensorFlow model; learn more about them here.

First, for a Keras model object built with TensorFlow, you can use the Keras convenience function to create a Verta Standard Model.
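As a hedged sketch of the Keras route: the hypothetical helper below looks up or creates a registered model and then calls `create_standard_model_from_keras()`, which is how we understand the Verta client exposes its Keras convenience function on a registered model. The `environment` argument would typically be `verta.environment.Python(requirements=["tensorflow"])`. Confirm the exact signatures in the Verta client reference before relying on them.

```python
def register_keras_model(client, keras_model, environment, model_name, version_name):
    """Register a Keras model as a Verta Standard Model version (hypothetical helper).

    client       -- a verta.Client
    keras_model  -- a trained tf.keras model object
    environment  -- e.g. verta.environment.Python(requirements=["tensorflow"])
    """
    # A registered model groups every version of the same model in the registry.
    registered_model = client.get_or_create_registered_model(name=model_name)
    # The convenience function wraps the Keras object in a Standard Model version.
    return registered_model.create_standard_model_from_keras(
        keras_model, environment=environment, name=version_name
    )
```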

Alternatively, a TensorFlow SavedModel can be used as an artifact in a model class that extends VertaModelBase.
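In this route, your class subclasses `VertaModelBase`: its `__init__(self, artifacts)` receives a dict of local paths keyed like the `artifacts` dict given at registration, and its `predict(self, input)` runs the loaded SavedModel for each request. Registration can then be sketched as below, assuming `create_standard_model()` accepts the class, an environment, and an `artifacts` dict, as we understand from the Verta client docs. The helper name and the `"saved_model"` key are hypothetical.

```python
def register_saved_model(client, model_cls, environment, saved_model_dir,
                         model_name, version_name):
    """Register a VertaModelBase subclass backed by a SavedModel (hypothetical helper).

    model_cls       -- your VertaModelBase subclass; its __init__ should load
                       artifacts["saved_model"], e.g. with tf.keras.models.load_model
    saved_model_dir -- local directory written by model.save(...)
    """
    registered_model = client.get_or_create_registered_model(name=model_name)
    return registered_model.create_standard_model(
        model_cls,
        environment=environment,
        # The key here must match the key model_cls reads in __init__.
        artifacts={"saved_model": saved_model_dir},
        name=version_name,
    )
```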

Note that the input and output of the predict function must be JSON-serializable. For the full list of acceptable data types for model I/O, refer to the VertaModelBase documentation.
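TensorFlow returns tensors and NumPy arrays, which `json.dumps` rejects, so a predict method typically converts its result before returning it. The dependency-free sketch below shows one way: the hypothetical `to_json_safe` helper calls the `.tolist()` method that NumPy arrays and scalars provide (for an eager tensor, call `.numpy()` first), and the hypothetical `assert_json_roundtrip` is a cheap local check to run on a sample output before registering the model.

```python
import json


def to_json_safe(value):
    """Recursively convert array-like outputs into JSON-serializable Python types."""
    if hasattr(value, "tolist"):  # NumPy arrays/scalars and other objects exposing .tolist()
        return value.tolist()
    if isinstance(value, dict):
        # JSON object keys must be strings.
        return {str(k): to_json_safe(v) for k, v in value.items()}
    if isinstance(value, (list, tuple)):
        return [to_json_safe(v) for v in value]
    return value


def assert_json_roundtrip(value):
    """Raise if `value` cannot be encoded or does not survive a JSON round trip unchanged."""
    decoded = json.loads(json.dumps(value))  # json.dumps raises TypeError on unsupported types
    if decoded != value:
        raise ValueError("value changed during JSON round trip")
    return decoded
```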

To make sure the requirements specified in the model version are actually sufficient, you can build the model container locally or as part of a continuous integration system. You can also deploy the model to the endpoint and make test predictions against it.
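Attaching the registered model version to the endpoint and running a smoke-test prediction can be sketched as follows. It assumes `endpoint` came from Step 1 and `model_version` from one of the registration routes above; `Endpoint.update(..., wait=True)` and `get_deployed_model().predict(...)` are the Verta client calls as we understand them, while the helper name is hypothetical.

```python
def deploy_and_smoke_test(endpoint, model_version, sample_input):
    """Point `endpoint` at `model_version`, wait for the rollout, and run one prediction.

    endpoint      -- a Verta endpoint (from create_endpoint / get_or_create_endpoint)
    model_version -- a registered model version
    sample_input  -- a JSON-serializable example input
    """
    # wait=True blocks until the new model version is serving on the endpoint.
    endpoint.update(model_version, wait=True)
    deployed_model = endpoint.get_deployed_model()
    # Return the raw prediction so the caller can compare it with a known-good output.
    return deployed_model.predict(sample_input)
```

Comparing the returned prediction with the output of the local model on the same input is a quick way to catch missing requirements before production traffic arrives.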

And that’s it! The full code for this tutorial can be found here.

Start fast-tracking your ML model deployment

As you’ve seen, deploying models to production doesn’t need to be a point of failure or a long, drawn-out development cycle.

To deploy models up to 30x faster:

- Learn how you can simplify MLOps with the Verta platform

- Get started with a free demo and trial
