Executives at financially successful organizations give higher priority to preparing for AI regulatory compliance than their counterparts at less successful companies, according to the 2023 AI Regulations Readiness study from Verta Insights.

The study, which collected feedback from more than 300 organizations, examined how organizations are preparing for upcoming AI regulations such as the EU AI Act and the American Data Privacy and Protection Act (ADPPA).

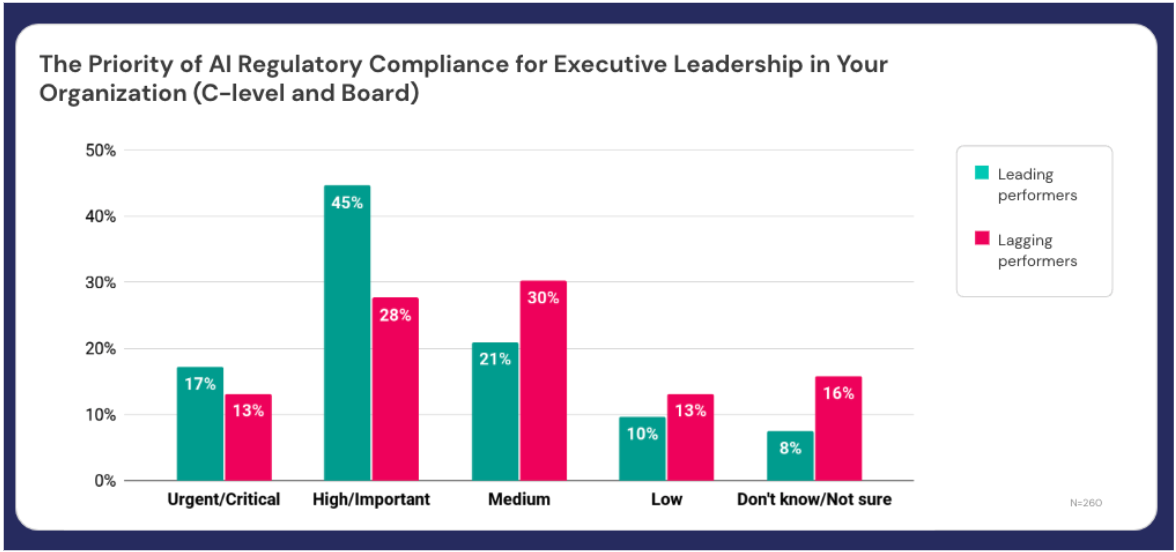

As part of the study, participants self-reported how frequently their organizations meet financial targets, allowing for comparisons between “leading performers” (who always or usually meet their targets) and “lagging performers” (who sometimes, rarely or never meet them).

Where Leaders and Laggards Differ

Leading organizations place a higher priority on AI regulatory compliance at the C-level and board level. Nearly two-thirds (62%) of leading performers view compliance as a high or urgent priority, versus 41% of lagging performers.

Moreover, 48% of participants from leading performers reported that compliance is viewed as more important than other priorities, versus 32% of lagging performers.

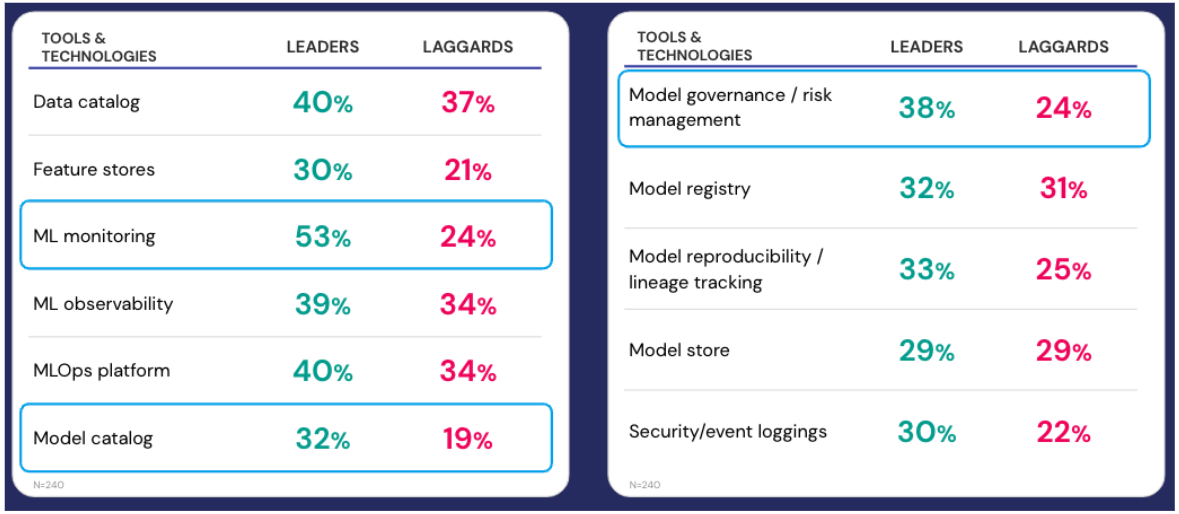

The study also looked at the tooling that companies are investing in to support regulatory compliance. In most tool categories, the research showed roughly equal levels of adoption between leaders and laggards.

However, significant differences were seen in three key areas: ML monitoring tools, model catalogs, and model governance and risk management tools. In these three categories, leaders showed substantially higher levels of adoption.

Verta Insights Perspective

Currently, we see many organizations mapping compliance requirements to the tools they already have in place, which is a good place to start. But AI governance will require additional tools to be fully effective. Governance is a “full lifecycle” process: Tools for ML monitoring are valuable, for example, but they come at the end of the cycle.

Organizations must ensure they have governance in place from the very beginning of the cycle to track the complete lineage of the model: Who approved the project at the outset? What kind of ethics and legal reviews were done before the project kicked off? What data was used for this model? Why was this model chosen?
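For teams that want to make those lineage questions concrete, one option is to capture the answers in a structured record at project kickoff. The following is a minimal sketch only, not a description of any particular governance tool: the `ModelLineageRecord` class, its field names, and the example values are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ModelLineageRecord:
    """Hypothetical record of the governance facts gathered for one model."""

    model_name: str
    approved_by: str      # who approved the project at the outset
    approval_date: date
    reviews: list[str] = field(default_factory=list)   # e.g. "ethics", "legal"
    datasets: list[str] = field(default_factory=list)  # data used for the model
    selection_rationale: str = ""                       # why this model was chosen

    def missing_items(self, required_reviews=("ethics", "legal")) -> list[str]:
        """Return the lineage questions that still have no recorded answer."""
        gaps = [f"{r} review" for r in required_reviews if r not in self.reviews]
        if not self.datasets:
            gaps.append("training data")
        if not self.selection_rationale.strip():
            gaps.append("model selection rationale")
        return gaps


# Illustrative values only; none of these come from the study.
record = ModelLineageRecord(
    model_name="churn-classifier",
    approved_by="J. Doe",
    approval_date=date(2023, 1, 15),
    reviews=["ethics"],
    datasets=["customers_2022"],
)
print(record.missing_items())  # ['legal review', 'model selection rationale']
```

A check like `missing_items()` could run before a project moves to the next stage, so that gaps in the lineage surface at the start of the cycle rather than at the monitoring stage.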

Under these regulations, organizations will also need to ensure that the Responsible AI principles of fairness, transparency, accountability, privacy and safety are embedded in the full lifecycle of the project.

To date, that has not typically been the case, but we expect that to start changing. Model risk management teams have had some tools in the past, for example, but those tools need to evolve to keep pace with the new kinds of models and AI being built today.

To read the complete findings of the study, download the complimentary research report.